Partner projects

A.H.E.A.D., the backbone project originally initiated at DLR, is supported by externally funded projects that continuously expand and complement its central idea: using technologies from space research to turn remote-controlled aid delivery vehicles into an application-relevant demonstrator. Each of these projects addresses specific tasks and solutions. In their respective context and together with the partners involved, they help raise the technology to a technology readiness level (TRL) suitable for a deployment demonstration in the first target application area, South Sudan.

Project-1: KI4HE

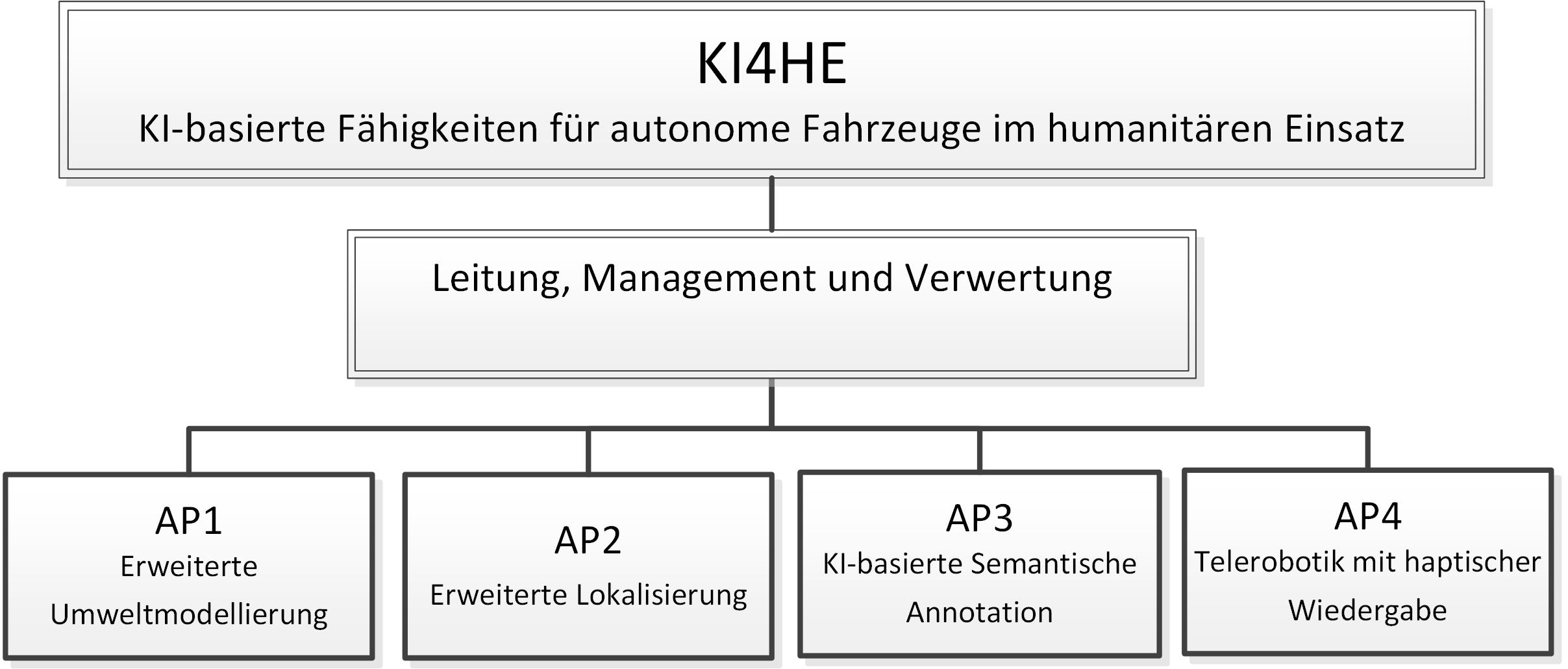

KI4HE – KI-basierte Fähigkeiten für autonome Fahrzeuge im humanitären Einsatz (AI-based capabilities for autonomous vehicles in humanitarian operations)

KI4HE is funded under the Bavarian R&D programme “Informations- und Kommunikationstechnik” (Information and Communication Technology). The project management agency is VDI/VDE Innovation + Technik GmbH.

Duration: 1.12.2021 – 30.11.2024

The technical work packages of KI4HE extend four of the five technology work packages of the A.H.E.A.D. project. Nevertheless, a clear delineation of the work between the two projects is planned.

The following work packages are addressed:

- Extended environmental modelling

- Advanced localisation

- AI-based semantic annotation

- Telerobotics with haptic rendering

These four technical work packages cover core topics that are highly relevant for the automation and teleoperation of future vehicle traffic in unstructured environments. KI4HE develops solutions for them using intelligent, AI-based algorithms.

Partners:

The research partner DLR and the industrial partner Roboception GmbH develop and investigate the technologies required for the safe, autonomous transport of food in crisis areas.

Within the project, Roboception GmbH will evolve the visual odometry of its rc_visard towards an odometry with 360° coverage. This odometry is complemented by a novel 360° 3D environment model, which enables AI-based semantic annotation and provides interfaces for semi-autonomous operation during remote control.

DLR is represented in KI4HE by two institutes: the Institute of Robotics and Mechatronics (DLR-RM) and the Institute of Communications and Navigation (DLR-KN). As project coordinator, DLR-RM participates in all work packages, with a focus on environmental perception, semantic annotation and teleoperation. DLR-KN focuses on localization and the associated intelligent GNSS fusion and computation.

The associated partner “WFP Incubator Munich” supports the project by specifying the application scenario. It may play a major role in KI4HE, particularly in the later exploitation of the results.

Project-2: MaiSHU

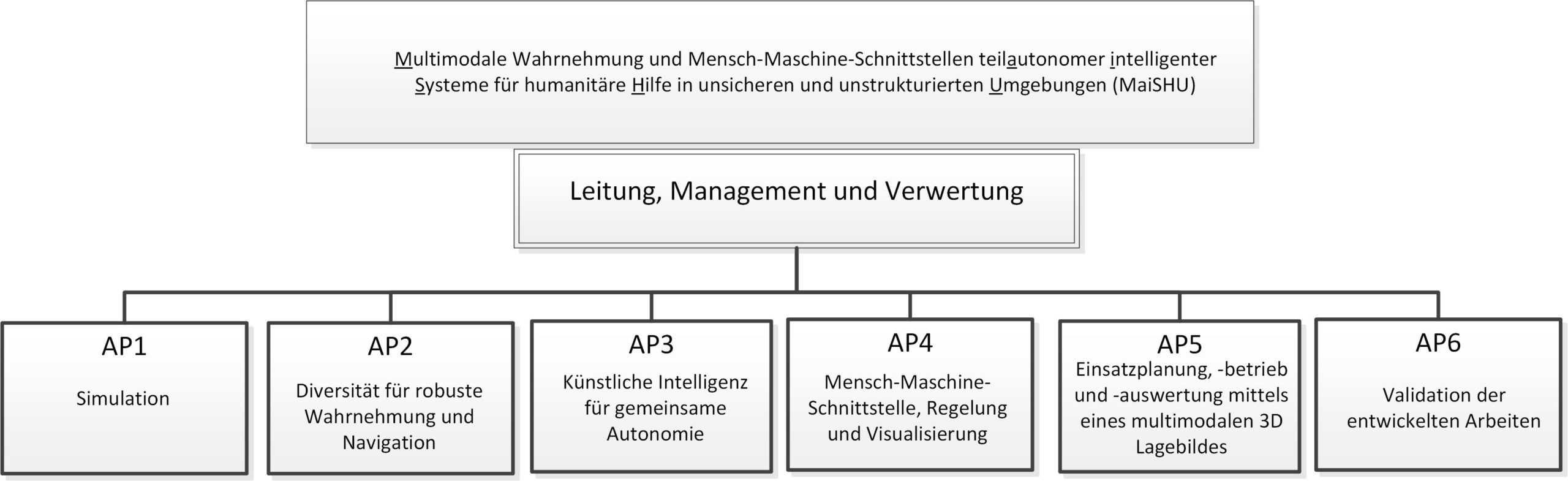

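MaiSHU – Multimodale Wahrnehmung und Mensch-Maschine-Schnittstellen teilautonomer intelligenter Systeme für humanitäre Hilfe in unsicheren und unstrukturierten Umgebungen (Multimodal perception and human-machine interfaces of semi-autonomous intelligent systems for humanitarian aid in unsafe and unstructured environments)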

MaiSHU is funded under the Bavarian R&D programme “Informations- und Kommunikationstechnik” (Information and Communication Technology). The project management agency is VDI/VDE Innovation + Technik GmbH.

Duration: 1.01.2022 – 31.12.2024

Budget: 2 M€

MaiSHU aims to develop innovative technologies that enable assisted teleoperation of vehicles for humanitarian aid and the delivery of goods in challenging, unstructured environments. The core of the project is the functional integration of three elements: a robust multimodal perception strategy, an AI-supported semantic understanding of the environment, and a powerful human-machine interface that lets the operator control vehicles remotely and reliably. Based on satellite imagery as well as vehicle and perception data, a global control centre provides high-level route plans to ensure that rough terrain can be traversed safely. The technologies developed in this project complement current developments in autonomous driving on roads. They are intended to reach high technology readiness levels (TRL) as a basis for future commercialisation across a variety of mobility tasks in partially structured or unstructured outdoor terrain.

The research partners DLR-RM and Blickfeld GmbH are jointly investigating the use of innovative solid-state laser systems (LiDAR). Because they have no moving parts, such LiDARs are cheaper and more robust against vibrations, which makes them suitable for the application outlined above. Besides how the laser sensor complements the existing camera systems, the partners examine modelling, calibration, registration and the integration of the sensors into a simulation environment. The 360° laser-based environment perception is validated in tests.

The research partners DLR-RM and SENSODRIVE GmbH are investigating the modalities of an extended human-machine interface. The interface combines intelligent algorithms with “confidence” information derived from all processed sensor data, conveyed through haptic and visual channels. This gives the teleoperator a smooth transition from direct remote control to partially autonomous or augmented assistance functions. Extending remote access to the vehicle's gear shift and switch panels enables operation at higher speeds.

The research partners DLR-DFD, specifically its ZKI (Center for Satellite-Based Crisis Information), and the WFP are developing methods for extending global mission planning and three-dimensional situation representation and assessment. To this end, high-resolution satellite images and local drone data are combined into a 3D situation picture in the global control centre (GMOC). Together with other collected geographic information, this picture is passed to the local control centre (LMOC) as a “dashboard” to support the operator. Combining several data modalities increases confidence in the planned route before the mission. Planning, pre-evaluation and live evaluation are continuously supported by intelligent algorithms, e.g. AI-based flood detection, and by the WFP's operational expert knowledge. After the vehicle mission, three-dimensional processing of all available geodata allows a detailed retrospective assessment and evaluation. It also supports the preparation of new missions and the improvement of the methods used.

The methodological and technological developments are tested in continuous simulations and evaluated in a joint demonstration.

The work is divided into the following work packages:

- Simulation

- Diversity for robust perception and navigation

- Artificial intelligence for shared autonomy

- Human-machine interface, control and visualization

- Resource planning, operation and evaluation using a multimodal 3D situation map

Project-3: MUSERO

MUSERO – Multisensor Roboter für die Erkundung in Krisenszenarien (MuSeRo: multi-sensor robot for reconnaissance in crisis scenarios)

MUSERO is funded internally by DLR as part of its 2023 R&D impulse projects.

Duration: 1.01.2023 – 31.12.2025

Budget: 500 k€

Highly automated systems for the detection and reconnaissance of CBE hazards in unstructured environments are crucial for effective crisis management and the protection of lives. Especially in large-scale emergencies, hazard defence operations or humanitarian aid deliveries, it is essential to obtain a fast and precise picture of the situation.

The project aims to enable teleoperated or autonomous reconnaissance in crisis scenarios and hazardous situations, with a focus on implementing data-centred technology.

In MUSERO, the SHERP vehicle is equipped with laser spectroscopy for substance classification. A key aspect is combining weak measurement signals and indicators into an overall assessment. This enables a precise and reliable analysis of the situation, even under difficult conditions. Ultimately, the project aims to improve the protection of emergency services and to make the handling of crisis situations more efficient.

DLR Partners:

Institut für Raumfahrtantriebe

Institut für Technische Physik

Institut für Robotik und Mechatronik

Institut für Softwaretechnologie

Link: https://elib.dlr.de/198281/1/Musero_Poster_vfdb_2023.pdf

Project-4: iFOODis

iFOODis – Improving the sustainability of food cycles through intelligent (robotic) systems

iFOODis was selected by a panel of experts and is funded by the Helmholtz Association (HGF) as part of its Grand Challenges campaign.

Duration: 1.01.2022 – 31.12.2027

Budget: 11 M€

iFOODis contributes to more sustainable food production by establishing an intelligent robotic monitoring network for the continuous assessment of ecosystem health on land and in surface waters in relation to agricultural activities. The synoptic observation of food crop state, agricultural activity and environmental parameters in the atmosphere, on land and in surface waters provides a scientifically sound data basis. On that basis, management options and recommendations can be developed for authorities and policy makers. To this end, iFOODis will develop and apply satellite remote sensing as well as aerial, ground-based and underwater robotic and sensor networks. This includes:
- methods for data acquisition and interpretation
- robotic semantic navigation and exploration
- autonomous sampling and sensor deployment
- robust wireless communication, ranging and timing
- data management aimed at collecting relevant data efficiently and using it to improve situational awareness

The iFOODis network is intended for three fields of application. It is aligned with the Sustainable Development Goals (SDGs) of the United Nations Development Programme (UNDP) and with the guidelines of the international bodies for marine protection. The first application is envisaged for the Schlei region (Baltic Sea, Germany), one of the areas most severely affected by agriculture. We aim for a high and cost-efficient transferability of the network to other regions. Recommendations will address, for example, measures to improve ecosystem health on land and in water, resource efficiency, and strategies for land use and fisheries. The second application focuses on the remote-operated transport of food and material in flooded, harsh and hard-to-reach regions, where conditions could be hazardous to human operators. The third application of intelligent robots and observational networks is the growing field of commercial agricultural robotics in land management and farming. Specific topics in robotics, data science and agricultural/environmental sciences will be addressed in the iFOODis Research School hosted by MUDS and JUB. iFOODis strives for a high transfer of technology and knowledge into industry in the fields of robotics and intelligent data processing, as well as for an open-access data policy.

Within iFOODis, the A.H.E.A.D. activities are mainly supported through the second application scenario.

Partners:

The German Aerospace Center (Deutsches Zentrum für Luft- und Raumfahrt, DLR) is the Federal Republic of Germany's research centre for aerospace, energy, transport, digitalisation and security, conducting both applied and basic research. The following institutes are involved in iFOODis:

The Alfred-Wegener-Institut, Helmholtz Centre for Polar and Marine Research, is an internationally recognised research institution in Bremerhaven that specialises in research into the polar regions and the seas surrounding them. It is one of the few scientific institutions worldwide that work on both the Arctic and the Antarctic.

Constructor University (formerly JUB – Jacobs University Bremen gGmbH) is a private, English-speaking campus university in Germany. It follows an interdisciplinary concept and sets high standards in research and teaching. Its aim is to strengthen people and markets with innovative solutions and advanced training programmes.

In the Munich School for Data Science (MUDS), the Technical University of Munich, Ludwig-Maximilians-Universität München, Helmholtz Zentrum München (HMGU) and the German Aerospace Center (DLR) have joined forces with the Max Planck Institute for Plasma Physics. Together they form an internationally visible and highly attractive research network. MUDS also cooperates with the Leibniz Supercomputing Centre (LRZ) and the Max Planck Computing & Data Facility (MPCDF). It works with Roche Penzberg, Boehringer and other industrial partners to promote application-oriented doctoral projects in biomedicine.

In addition to the core research partners mentioned above, industrial companies, authorities and public institutions, including the World Food Programme, are also members of the project working group.

Project-5: RESITEK

RESITEK – Resiliente Technologien für den Katastrophenschutz (Resilient technologies for disaster control)

RESITEK is funded internally by DLR as part of its 2024 R&D impulse projects.

Duration: 1.01.2024 – 31.12.2026

Budget: 10 M€

Catastrophic events pose major challenges for civil defence and disaster control with regard to three areas:
a) the availability of communication services
b) the availability of up-to-date data needed for situation awareness and assessment, especially for the affected critical infrastructures
c) the accessibility of disaster areas with destroyed infrastructure, and the safe transport of goods to and from the affected areas

RESITEK therefore aims to bring together existing skills and technologies from DLR's focus areas of aviation, space, energy, transport and security, and to integrate them into a novel data and visualization system. This system is designed to provide continuous situation monitoring. During catastrophic events, it also provides user-oriented, mission-optimized situation awareness, mission planning and mission execution. The system serves both data collection and analysis. In normal operation, it recommends measures. In the event of a disaster, it makes the assistance provided by authorities and organizations with security tasks (BOS) as efficient and fast as possible. A joint final demonstration will then show how the developed platforms interact with a wide variety of operational vehicles (ground and air, manned and unmanned) in a realistic operational scenario with authorities and stakeholders.

Consequently, RESITEK follows an approach that is open with respect to scenarios and users. This requires the developed system to be both modular and continuously extensible. The project therefore emphasizes the following main research areas:
a) space weather (effects on humans and energy infrastructure)
b) resilience of power grids (creation of island grids)
c) mobility under exceptional circumstances (use of battery electric vehicles for power grids, evacuation and accessibility)
d) remote guidance and mission planning (including communication and navigation)
e) complex situation awareness (based on a wide range of deployment vehicles)

DLR Partners:

Deutsches Fernerkundungsdatenzentrum

Institut für Flugführung

Institut für Flugsystemtechnik

Galileo Kompetenzzentrum

Institut für KI-Sicherheit

Institut für Kommunikation und Navigation

Institut für Luft- und Raumfahrtmedizin

Institut für Materialphysik im Weltraum

Institut für Optische Sensorsysteme

Institut für Physik der Atmosphäre

Institut für den Schutz terrestrischer Infrastrukturen

Institut für Robotik und Mechatronik

Institut für Solar-Terrestrische Physik

Institut für Systemdynamik und Regelungstechnik

Institut für Verkehrssystemtechnik

Institut für Vernetzte Energiesysteme

Institut für Verkehrsforschung